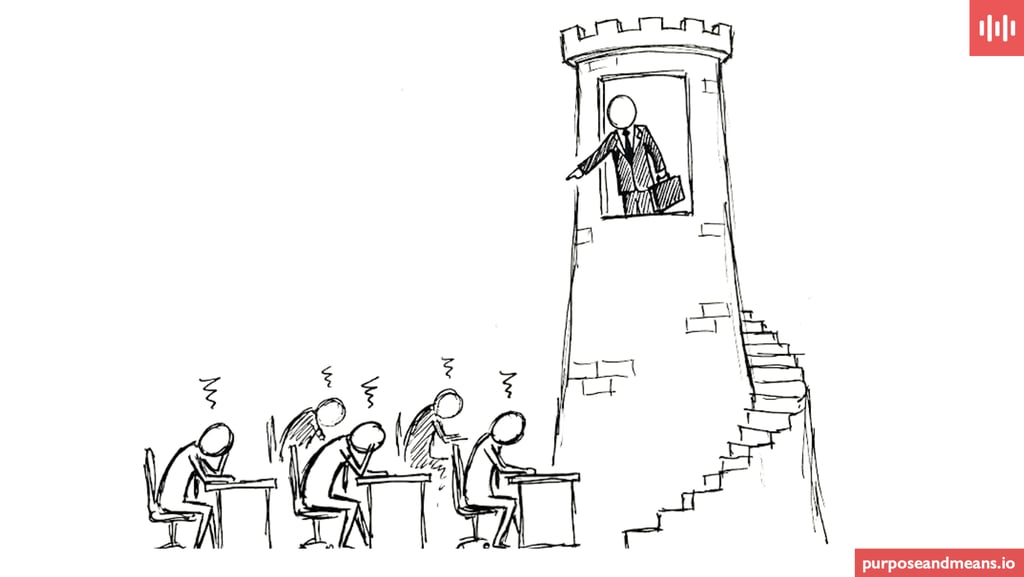

Avoid the ivory tower: Hub-and-Spoke AI governance without exhausting your local colleagues

AI governance is about to be stress-tested by agentic systems, and the companies that hold up won’t be the most centralised; they’ll be the ones that embed oversight locally without burning out their champions.


Tim Clements

1/9/2026 · 3 min read

Normally my posts on this blog spill over to LinkedIn, but this one is the other way round: a couple of posts there about decentralised AI accountability have come over here.

For years, I’ve seen decentralised “local stewardship” models show up in information security, risk, and data protection, and now they’re appearing (at speed) in AI governance.

I’ve seen this from both angles: years ago as a local security officer and local risk officer inside a large multinational, and later running security and data protection programmes across other global companies. You learn quickly what good looks like, and what goes wrong. In fact, my own “data protection champions” model was built directly from those early experiences, and several clients have successfully adapted it in their companies.

I know local champion networks tend to divide opinion. Some people swear by them; others have scars from implementations that quietly failed. My take: these networks can be powerful if they’re designed properly and tended to afterwards.

A public-sector signal worth paying attention to

Recently I came across the Texas Department of Transportation (TxDOT) AI Strategic Plan 2025–2027 and one element stood out: instead of trying to control every algorithm from a central HQ, TxDOT is building an “AI Champion Network.”

It’s essentially the classic Hub-and-Spoke model:

The Hub: sets strategy, standards, guardrails, safety expectations

The Spokes: embedded “AI Champions” in districts/business units who own execution locally

There’s a bigger lesson here than “government does governance too.” It suggests that the companies likely to succeed in the next 12–24 months won’t be the ones with the biggest compliance departments; they’ll be the ones that can weave governance into daily operations through embedded networks. So, if a government agency can move beyond an “Ivory Tower” governance model, there’s a good chance your company can too.

Your local colleagues are not resisting change: they are tired out

After I shared these thoughts in my initial LinkedIn post on the topic, a few comments prompted a further (and important) reflection: the risk of “champion fatigue.”

When these networks struggle, it’s often framed as resistance, as if local colleagues are unwilling. More often, though, it’s simply overload: people burning out under the weight of extra compliance tasks piled onto an already full role. I think that distinction changes everything.

If the problem is overload, the solution isn’t more pressure. It has to be better design.

Governance shouldn’t become a bottleneck at HQ, but it also can’t become a burden dumped onto the frontline (the first line of defence).

Why “local champions with checklists” won’t be enough for what’s coming

Many companies are moving from GenAI (systems that speak) to Agentic AI (systems that act).

When AI starts executing transactions, spending money, triggering processes, or writing and deploying code, governance shifts from “is this content acceptable?” to “is this system operating safely and as intended, end-to-end?”

In other words, a local champion armed with a checklist will struggle to provide the end-to-end operational oversight that systems which act really need.

In a trends radar I began late last year (AI Governance: 2026 and Beyond), two trends came through particularly strongly:

Agentic AI governance: a shift from content moderation to operational oversight

Decentralised accountability: the need for embedded risk owners, not just central policies

I’ve seen external signals that reinforce these directions, including:

A WWT report pointing to “Ambassador Networks” as critical infrastructure in banking

Both are worth reading if you’re designing (or redesigning) your local AI governance network.

The design challenge: embed governance and reduce friction

So the question isn’t “Should we decentralise governance?” It’s:

How do we embed accountability locally without turning people into part-time compliance administrators?

How do we make the hub strong enough to provide clarity and safety, but light enough not to block delivery?

How do we design governance for systems that act, not just tools that generate text?

Done well, hub-and-spoke governance can scale organisational competence as well as oversight.

And that’s where I think the next wave of AI (and data protection) maturity will come from: not more centralised control, but better-designed networks that make governance part of how work gets done, without burning out the people we rely on.

Purpose and Means is a niche data protection and GRC consultancy based in Copenhagen but operating globally. We work with global corporations, offering flexibility and a slightly different approach from the larger consultancies. We have the agility to adjust and change as your plans change. Take a look at some of our client cases to get a sense of what we do.

We are experienced in working with data protection leaders and their teams in addressing troubled projects, programmes and functions. Feel free to book a call if you wish to hear more about how we can help you improve your work.

Purpose and Means

Purpose and Means believes the business world is better when companies establish trust through impeccable governance.

Based in Copenhagen, Operating Globally

tc@purposeandmeans.io

© 2026. All rights reserved.